
Note: Originally published by Lightwork co-founder Andy Bromberg on his personal site (www.andybromberg.com) on December 24, 2025, and reposted here for our readers.

The discourse around EMFs (electromagnetic fields) and whether they are harmful to humans is a mess, tainted by poorly-communicated conclusions, bad studies, and more.

And to be fair, it's a tough thing to pin down. We're talking about an exposure that:

If you're not familiar with what EMFs are from a conceptual / scientific perspective, I'd recommend reading my earlier post, "A rational, skeptical, curious person's guide to EMFs". Ideas from that piece will be referenced throughout.

This post series is meant to be a rigorous breakdown of the current state of scientific understanding around the health effects of EMFs.

Or, at least, it's meant to represent what my understanding of the current state of scientific understanding is. Please do reach out with any thoughts and especially any corrections. I am not a professional scientist. But I've tried to reason about this from first principles and examine the evidence honestly and openly.

It is long. I'm sorry. But I'm trying to do right by what is a very complex topic, while also making it a bit more readable than most journal articles.

Asking "are EMFs bad for you?" does not lead to well-bounded, falsifiable conclusions. We need to define what we're even talking about: what is the specific, testable question we are looking to answer? That is something often lost in the noise of EMF debates.

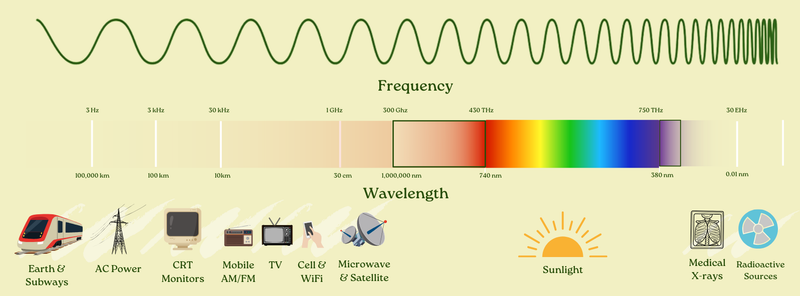

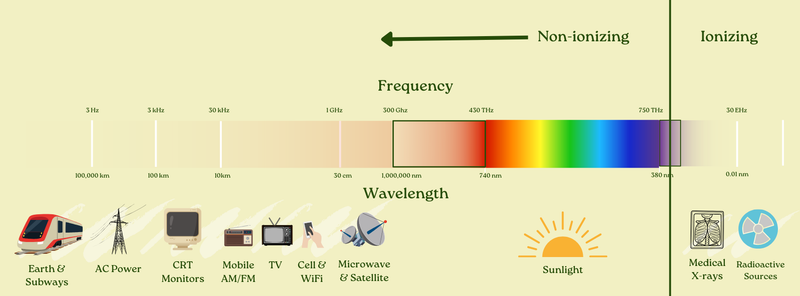

First: the electromagnetic spectrum is wide. Which parts of it are we talking about?

There is no dispute that higher-frequency EMFs like x-rays and gamma rays can cause harm, but they do so by directly breaking chemical bonds. This class of EMFs is called "ionizing radiation" and is known to be harmful through this mechanism. But the ionization capacity of EMFs stops at roughly a 120nm wavelength, or the extreme end of the UV part of the spectrum. To the left of that (longer wavelength), there is no ionization potential.

Moving further down the frequency range from x-rays, there's also little-to-no dispute that visible light can have biological impacts, including negative ones if used "improperly": think about blue light disrupting circadian rhythm.

There's also no dispute that even lower-frequency EMFs can be harmful if they are at high enough power density to cause "thermal effects." Think about a microwave oven (roughly the same type of EMF as Wi-Fi signals, albeit at much higher power): obviously if you put yourself in a microwave, the result wouldn't be pretty. That's because your tissue would actually heat up, which can clearly be damaging.

These undisputed "thermal effects" are the basis for much of the US regulatory regime (the history of which I wrote about here). We are generally protected from these thermal effects by regulation.

So really, what we're talking about is whether non-ionizing EMFs at power levels too low to cause thermal effects can have deleterious impact on humans.

The place to focus is on the two most controversial and debated common types of exposure:

And within these, what is most of interest is exposure at typical levels and durations.

In Part I of this post, I will focus only on RF-EMF. Part II will cover ELF-MF.

We also must consider who it is that we're talking about. An exposure doesn't need to negatively impact everyone for it to be considered "bad." If we were to find out that typical levels of RF-EMF were negatively impacting babies, or immunocompromised people, we'd want to know that, even if it was fine for another chunk of the population.

And so perhaps the precise question to answer is something like: is there reason to believe that RF-EMF, at exposure levels and durations typical in modern life, may cause increased risk of adverse health effects, at least for sensitive members of the population?

If you're like most educated people, your prior on "EMFs are harmful" is pretty low. Mine was too. Here's what my arguments were before I got into the literature:

Each of these arguments has something to it, and should be factored into our view of the corpus of evidence. However, to spoil my conclusion, I've come to believe that none are a silver bullet that totally proves the case against EMF harm. They should be weighed, but they do not end the discussion (and we will cover each in detail later).

Before I send you down a long (~25,000 word?) rabbit hole, I'll share my bottom-line conclusions on the basis of the evidence. Here they are:

The weight of scientific evidence supports that there is reason to believe that RF-EMF, at exposure levels typical in modern life, may increase the risk of adverse health effects.

But also: there is immense uncertainty, and it is not clear how applicable historical studies are to our modern exposure levels given constantly changing characteristics of the exposure profile (this could cut either way, positive or negative).

We are effectively running an uncontrolled experiment on the world population with waves of new technology which have no robust evidence of safety.

If you believe new exposures should be demonstrated to be safe before being rolled out to you, you should take precautions around RF-EMF exposures (which have not been demonstrated to be safe).

If you only believe precautions are warranted for exposures which are demonstrated to be harmful, then perhaps you don't need to.

To explain how I got to those conclusions, we will:

Before we go further, a disclosure: I co-founded Lightwork Home Health, a home health assessment company that helps people evaluate their lighting, air quality, water quality, and, yes, EMF exposure (and more). As such, I have an economic interest in this matter.

With that said, I want to be clear about the causal direction: I co-founded Lightwork after becoming convinced by the evidence of these types of environmental toxicities. That's why I started the business. This research isn't a post-hoc way for me to justify Lightwork's existence!

And regardless, my purpose here is to do my best to present the evidence fairly, including the strongest skeptical arguments and what would change my mind, and let you draw your own conclusions.

Here's a fundamental challenge: you can't "prove" causation with 100% certainty in modern observational science.

You can't run a randomized controlled trial where you assign people to use or not use cell phones for 30 years and see who gets brain cancer. You can't ethically differentially expose children to power lines and track their leukemia rates. For most environmental exposures, definitive experimental proof in humans is impossible.

So how did we conclude that tobacco causes lung cancer? That asbestos causes mesothelioma? That lead damages children's brains? None of these had randomized controlled trials in humans either.

The answer is: we use a framework for evaluating causation from imperfect evidence.

In 1965, epidemiologist Austin Bradford Hill proposed nine criteria (or "viewpoints") for assessing whether an observed association is likely to be causal. These criteria have become a common framework in environmental and occupational health:

No single criterion is necessary or sufficient (except temporality, which is considered necessary). You weigh the totality of evidence across all nine. Hill himself emphasized this:

"None of my nine viewpoints can bring indisputable evidence for or against the cause-and-effect hypothesis... What they can do, with greater or less strength, is to help us make up our minds."

This is, for example, how we decided smoking causes cancer. Not because any single study was definitive, but because the evidence accumulated across multiple criteria: strong associations, consistent across populations, dose-response relationships, biological plausibility from animal studies, coherence with disease patterns.

These criteria are especially helpful for considering causality for questions that are very hard to measure directly / experimentally. Say, for example, "what are the effects of long-term / lifetime mobile device & WiFi use (i.e. RF-EMF exposure) on humans?"

This is basically an unanswerable question in a direct, experimental, RCT-style way. As mentioned elsewhere, you can't isolate certain people from RF-EMF exposure their whole life, and expose other roughly-equivalent people, and look at what happens to each group.

RF-EMF exposure is inevitable and omnipresent in the modern world. You could put people in a no-EMF chamber for a day vs. not and measure things, but you can't directly measure long-term exposure's effects.

So instead, we use something like the Bradford Hill viewpoints and ask: when we consider observational human studies, direct experimental animal studies, plausibility of biological mechanisms, and other characteristics of knowledge all together... what does it say in aggregate?

I'll return to these criteria after presenting the EMF evidence.

Most modern scientific studies use a methodology called null hypothesis significance testing (NHST). Understanding what it does, and doesn't, tell you is also crucial here.

The NHST process:

Here's the critical part: "not statistically significant" does not mean "no effect exists."

It means: given our sample size, measurement precision, and study design, we couldn't rule out that the observed data is consistent with no effect.

I know that sounds a little incoherent and pedantic. But it's a really, really important point for understanding modern science. If you are looking at a study run with NHST (basically all of them now), there are two possible outcomes:

The second point by itself is not strong evidence for no effect existing! Said another way: absence of evidence is not evidence of absence, and absence of certainty of harm is definitely not certainty of absence of harm.

You could have an "absence of evidence" finding for a number of reasons, including:

NHST was designed to prevent false positives, to stop people from claiming effects that don't exist. It was not designed to prove safety. It cannot prove safety. That's not what it does.

So when you read "Study finds no significant link between X and Y," what the study actually found was: "we couldn't reject the null hypothesis." That's not the same as "we proved X doesn't cause Y."

At the same time, repeated null findings with narrow confidence intervals can be evidence against effects above some size. A well-powered study can't "prove safety," but it can rule out large or moderate risks under the exposure definition it actually measured.

Throughout this post, I will show the results of NHST studies in a typical format for this topic, for example:

OR 2.22 (1.69-2.92)

What does this mean?

OR stands for Odds Ratio. It is a common way of evaluating increased or decreased risk for disease. An OR of more than 1 means an increased risk over baseline; an OR of 1 means no increased risk; an OR of less than one means decreased risk (referred to as a "protective effect").

Odds Ratio is actually a little bit tricky to build intuition for. Relative Risk is a related but different metric. It can generally only be calculated when you know how many people in each exposure group got the disease out of everyone in that group (as in a cohort study), whereas Odds Ratio can be calculated just by sampling a subset of the population (as in a case-control study).

More specifically, the Odds Ratio is equal to: the ratio of exposed people who got the disease to exposed people who didn't, divided by the ratio of unexposed people who got the disease to unexposed people who didn't.

For example, if:

The OR would be: (10/90) / (7/193) = 3.06

For our purposes, you can roughly think of this as a "tripling" of your likelihood of getting cancer if exposed to that carcinogen. That's not quite precise, and statisticians would be upset with me for saying so.¹ But for a rare disease like cancer, this is fine for intuition purposes.
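To see how far the "tripling" intuition is from the stricter Relative Risk, here is a minimal Python sketch. It uses the counts implied by the formula above (10 exposed people with the disease and 90 without; 7 unexposed people with the disease and 193 without). The function names are my own, for illustration only.

```python
def odds_ratio(exposed_cases, exposed_noncases, unexposed_cases, unexposed_noncases):
    """Odds of disease among the exposed, divided by odds among the unexposed."""
    return (exposed_cases / exposed_noncases) / (unexposed_cases / unexposed_noncases)


def relative_risk(exposed_cases, exposed_noncases, unexposed_cases, unexposed_noncases):
    """Risk (cases / everyone in the group) among the exposed, divided by risk among the unexposed."""
    risk_exposed = exposed_cases / (exposed_cases + exposed_noncases)
    risk_unexposed = unexposed_cases / (unexposed_cases + unexposed_noncases)
    return risk_exposed / risk_unexposed


counts = (10, 90, 7, 193)
print(f"OR = {odds_ratio(*counts):.2f}")     # OR = 3.06
print(f"RR = {relative_risk(*counts):.2f}")  # RR = 2.86
```

In this toy example the disease is not especially rare (10% of the exposed group), so the two numbers differ a little. The rarer the disease, the closer the Odds Ratio gets to the Relative Risk.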

Okay, so that's the OR number. Then, in parentheses, I had (1.69-2.92): this is the 95% confidence interval (CI).

A 95% confidence interval means that, under the model and sampling assumptions, this range would contain the true value 95% of the time. More casually (and slightly less precisely), we are saying we are 95% sure that the OR is between 1.69 and 2.92, and the point estimate (typically the maximum-likelihood estimate from a regression) is 2.22.

This then leads to an understanding of "statistical significance." A result is only considered statistically significant if the 95% CI does not include the null value (the value that corresponds to "no effect"). This makes sense intuitively: if we're 95% sure the result falls in some range, but that range includes "there's no effect," we shouldn't claim that we're confident there is an effect.

In the case of Odds Ratios, the null hypothesis is 1, because "no effect" means "no increased chance over baseline," and that's the definition of an OR of 1.

So that means that if you see a confidence interval that includes 1, the result is not statistically significant, no matter how high the front number is. e.g. if a study reports:

OR 4.75 (0.54-9.12)

That is not a statistically significant finding (1 is in the range 0.54-9.12), despite the fact that it seems to say there's an OR of 4.75.

On the other hand, if a study reports:

OR 1.33 (1.07-1.66)

That is a statistically significant finding of a 1.33 Odds Ratio (roughly 33% increased risk, with the caveats above), with 95% confidence that the true Odds Ratio is between 1.07 and 1.66.
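The rule of thumb above (does the 95% CI include 1?) can be written as a tiny check. This is only a sketch of the reading rule, applied to the three example results quoted in this section; the function name is hypothetical.

```python
def is_statistically_significant(ci_low, ci_high, null_value=1.0):
    """Significant (at the CI's level) if the interval excludes the null value."""
    return not (ci_low <= null_value <= ci_high)


# The three example results from the text, as (CI low, CI high).
examples = {
    "OR 2.22 (1.69-2.92)": (1.69, 2.92),
    "OR 4.75 (0.54-9.12)": (0.54, 9.12),
    "OR 1.33 (1.07-1.66)": (1.07, 1.66),
}

for label, (low, high) in examples.items():
    verdict = "significant" if is_statistically_significant(low, high) else "not significant"
    print(f"{label}: {verdict}")
```

Note that the point estimate plays no role in the check: the 4.75 result fails it while the much smaller 1.33 result passes.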

The more data you have and the cleaner / less biased the data is, the closer your measured OR will get to the true value, and the tighter your CI will get around it. The less good data you have, the wider that confidence interval will be (which of course means it is harder to get to statistical significance, if there is an underlying effect).
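To illustrate how sample size tightens the interval, here is a sketch using one common textbook approximation for the CI of an Odds Ratio from a 2x2 table (the log, or Woolf, method). This is an assumption for illustration: the studies discussed in this post typically get their intervals from regression models, not from this formula. The counts reuse the earlier worked example, then the same proportions with ten times as many people.

```python
import math


def odds_ratio_ci(a, b, c, d, z=1.96):
    """Return (OR, low, high) using the log (Woolf) approximation.

    a = exposed cases, b = exposed non-cases,
    c = unexposed cases, d = unexposed non-cases.
    z = 1.96 gives an approximate 95% interval.
    """
    estimate = (a / b) / (c / d)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    low = math.exp(math.log(estimate) - z * se_log_or)
    high = math.exp(math.log(estimate) + z * se_log_or)
    return estimate, low, high


small = odds_ratio_ci(10, 90, 7, 193)
large = odds_ratio_ci(100, 900, 70, 1930)  # same proportions, 10x the people

for name, (estimate, low, high) in (("small study", small), ("large study", large)):
    print(f"{name}: OR {estimate:.2f} ({low:.2f}-{high:.2f})")
```

Both studies produce the same point estimate, but the larger one has a much narrower interval around it.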

Note that positive or negative findings that are not statistically significant can still be useful, especially in the context of meta-analyses, which pool multiple similar studies. If a study just happened to be underpowered to find an effect size, when it is pooled, it could help push the overall finding to significance.
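Here is a rough sketch of how pooling can push individually non-significant results to significance. It uses simple fixed-effect, inverse-variance pooling on the log-OR scale, with the standard error backed out of each reported CI. The three studies are hypothetical numbers I made up for illustration, and real meta-analyses often use random-effects models and risk-of-bias weighting instead.

```python
import math


def pool_fixed_effect(studies, z=1.96):
    """Pool (OR, ci_low, ci_high) tuples by inverse-variance weighting of log ORs."""
    total_weight = 0.0
    weighted_log_sum = 0.0
    for estimate, low, high in studies:
        # Back out the standard error of log(OR) from the reported 95% CI.
        se = (math.log(high) - math.log(low)) / (2 * z)
        weight = 1 / se**2
        total_weight += weight
        weighted_log_sum += weight * math.log(estimate)
    pooled_log = weighted_log_sum / total_weight
    pooled_se = math.sqrt(1 / total_weight)
    return (
        math.exp(pooled_log),
        math.exp(pooled_log - z * pooled_se),
        math.exp(pooled_log + z * pooled_se),
    )


# HYPOTHETICAL: three small studies, each OR 1.5 with a CI that includes 1.
hypothetical_studies = [(1.5, 0.9, 2.5), (1.5, 0.9, 2.5), (1.5, 0.9, 2.5)]

pooled_or, pooled_low, pooled_high = pool_fixed_effect(hypothetical_studies)
print(f"pooled: OR {pooled_or:.2f} ({pooled_low:.2f}-{pooled_high:.2f})")
```

None of the three hypothetical studies is significant on its own, but the pooled interval sits entirely above 1.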

Finally: it's important to recognize that relative risk and absolute risk are two very different things. When we're talking about ORs, we're talking about the odds of getting a disease increasing. If that disease is rare in the first place (like tumors we'll discuss in this piece), even if you face a moderately increased odds ratio, it's still rare to get the disease! It's just less rare than it was before. But it doesn't automatically turn the rare disease into a common epidemic.
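A quick sketch of the relative-versus-absolute point, converting an Odds Ratio back into an absolute risk. Both inputs are assumptions chosen purely for illustration: the baseline of 5 cases per 100,000 people is a made-up number, not a real incidence figure, and the OR of 1.4 stands in for a "moderately increased" odds ratio.

```python
def risk_after_odds_ratio(baseline_risk, odds_ratio):
    """Apply an odds ratio to a baseline risk and return the resulting risk."""
    baseline_odds = baseline_risk / (1 - baseline_risk)
    new_odds = baseline_odds * odds_ratio
    return new_odds / (1 + new_odds)


baseline = 5 / 100_000   # HYPOTHETICAL baseline risk, for illustration only
moderate_or = 1.4        # HYPOTHETICAL "moderately increased" odds ratio

exposed = risk_after_odds_ratio(baseline, moderate_or)
print(f"baseline risk: {baseline * 100_000:.1f} per 100,000")
print(f"exposed risk:  {exposed * 100_000:.1f} per 100,000")
print(f"extra cases:   {(exposed - baseline) * 100_000:.1f} per 100,000")
```

Under these assumed numbers, a 40% relative increase adds about two cases per 100,000 people: a real change, but the disease stays rare.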

In the interest of evaluating the Bradford Hill viewpoints, we will first look at animal studies, then human epidemiological studies, and then some proposals on biological mechanisms.

Once we've done so, we'll be able to go through the criteria on the basis of that corpus of evidence and weigh it.

We laid out our principal question earlier:

Is there reason to believe that RF-EMF, at exposure levels and durations typical in modern life, may cause increased risk of adverse health effects, at least for sensitive members of the population?

Like I said, we'll focus mostly on cancer risk as that is the most studied endpoint. So really, for most of the post we'll be focusing on:

Is there reason to believe that RF-EMF, at exposure levels and durations typical in modern life, may cause increased risk of cancer, at least for sensitive members of the population?

And we will answer that question by looking at animal studies, epidemiological studies, and biological mechanisms, and then applying the Bradford Hill criteria to that data.

First, we'll consider: is there evidence of RF-EMF carcinogenicity in animals at relevant exposure levels? For this question, we don't need to look very hard.

In 2018, the U.S. National Toxicology Program (NTP) released results from a $30 million, decade-long study, the most comprehensive, rigorous experimental animal assessment of cell phone radiation ever conducted² (I discuss what led to this study in an earlier post).

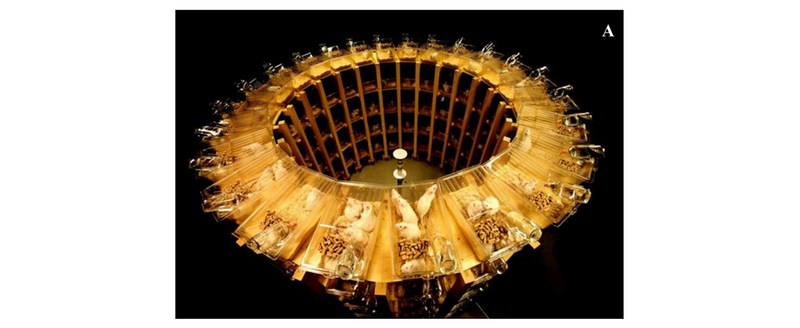

The NTP study exposed rats and mice to cell phone RF-EMF frequencies with common cellular signal modulation schemes (albeit from the early 2000s, since that is when the study was designed). The animals were exposed to levels around and above (although in the same order-of-magnitude) current human limits for 9 hours/day, 7 days/week, for roughly two years (a lifetime for rats). The study was carefully designed, with controlled exposure chambers and sham-exposed concurrent controls.

The NTP uses a standardized evidence scale: "clear evidence," "some evidence," "equivocal evidence," "no evidence." They found:

Remember these tumor categories, schwannomas and gliomas, for when we get to the epidemiological evidence.

"Clear evidence" is the highest confidence rating NTP assigns, and is not something they say lightly. Their definition of it is:

Clear evidence of carcinogenic activity is demonstrated by studies that are interpreted as showing a dose-related (i) increase of malignant neoplasms, (ii) increase of a combination of malignant and benign neoplasms, or (iii) marked increase of benign neoplasms if there is an indication from this or other studies of the ability of such tumors to progress to malignancy.

And "Some evidence" is defined as:

Some evidence of carcinogenic activity is demonstrated by studies that are interpreted as showing a test agent-related increased incidence of neoplasms (malignant, benign, or combined) in which the strength of the response is less than that required for clear evidence.

Both of those categories are considered by the NTP to be "positive findings."

They also published followup papers, including one looking at DNA damage, which found significant increases in DNA damage in: the frontal cortex of the brain in male mice, the blood cells of female mice, and the hippocampus of male rats.

The NTP studies are among the strongest experimental signals in the literature, and are rightfully seen as extremely compelling evidence for carcinogenicity (in animals) of RF-EMF at levels on the same order as human exposure. And as they conclude:³

Under the conditions of this 2-year whole-body exposure study, there was clear evidence of carcinogenic activity of GSM-modulated cell phone RFR at 900 MHz in male Hsd:Sprague Dawley® SD® rats based on the incidences of malignant schwannoma of the heart. The incidences of malignant glioma of the brain and benign, malignant, or complex pheochromocytoma (combined) of the adrenal medulla were also related to RFR exposure. The incidences of benign or malignant granular cell tumors of the brain, adenoma or carcinoma (combined) of the prostate gland, adenoma of the pars distalis of the pituitary gland, and pancreatic islet cell adenoma or carcinoma (combined) may have been related to RFR exposure.

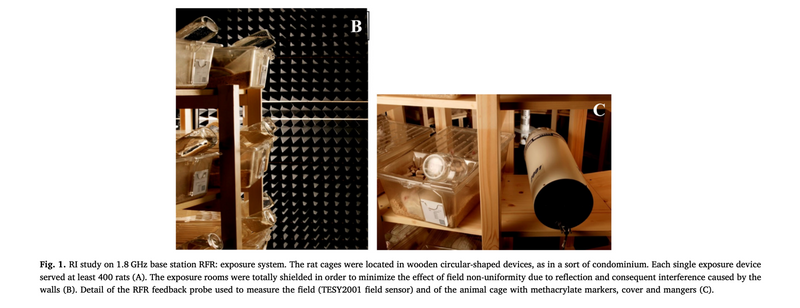

The Ramazzini Institute in Italy, a respected independent toxicology laboratory, then conducted a parallel study examining cell radiation, seeking to reproduce or counter the NTP's results.

They referred to this setup as "wooden circular-shaped devices, as in a sort of condominium". Not the sort of condominium I want to live in, whether RF-EMF harm is real or not!

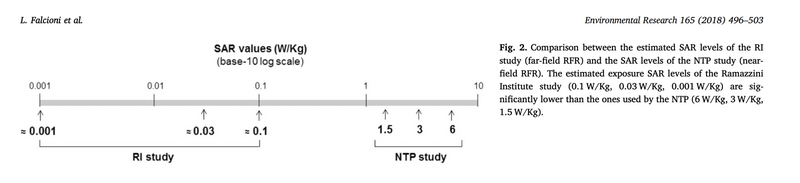

They subjected rats to GSM-modulated RFR at 1.8GHz over the course of their lives. However, they subjected them to much lower exposures. The NTP exposure was at Specific Absorption Rates (SAR) ranging from 1.5 to 6 W/kg to simulate near-field exposure (like having a cell phone against your head). The Ramazzini exposures were orders of magnitude less, from roughly⁴ 0.001 to 0.1 W/kg, to simulate far-field exposure (like being exposed to a cell phone base station or a device further from you).

They found:

And so they conclude:

The RI findings on far field exposure to RFR are consistent with and reinforce the results of the NTP study on near field exposure, as both reported an increase in the incidence of tumors of the brain and heart in RFR-exposed Sprague-Dawley rats.

So, critically, they found at minimum a statistically significant increase in malignant heart schwannomas, which was one of the key findings of the NTP study. And remember that the Ramazzini exposures were 50-1,000 times lower than NTP, within the range people experience simply living near cell towers.

In science, independent replication is everything. When two labs find overlapping unusual results without coordination, it's very unlikely to be a methodological artifact. And these were two independent, top-tier labs, in different countries, looking at different but adjacent exposures, and finding the same rare tumor types.

There are other studies that suggest other effects from RF-EMF on animals. But for the sake of brevity, I will focus on just findings related to those above (as I believe they have the strongest evidence).

Conveniently, the WHO recently commissioned a systematic review of animal cancer bioassays: Mevissen et al. (2025), Environment International. The review found that across the 52 publications included, after evaluating for risk of bias, there was evidence that RF-EMF exposure increased the incidence of cancer in experimental animals, with:

This is exactly in line with (and partially driven by) the NTP & Ramazzini findings. I have not seen much dispute over these conclusions.

There are many other animal studies on RF-EMF (including several interesting ones around RF-EMF increasing tumor rates when animals are also exposed to separate known carcinogens).

But because I believe the conclusions above (high certainty of evidence for an association in experimental animals between RF-EMF exposure and gliomas/schwannomas) are relatively uncontroversial, I will leave it here, as this minimum bar is sufficient to make an overall argument. Any additional study inclusion would be for purposes of increasing the scope of potential RF-EMF biological impacts, which we can reserve for a future post.

Now, let's turn to the human studies on RF-EMF. Unlike rats, we can't put humans in isolated experimental "wooden circular-shaped condominiums" and blast them with RF-EMF or sham controls for their entire life. So instead, we rely on observational studies, typically either of the case-control variety, or prospective (the differences between which we will discuss shortly).

This difference is why we want to look at both animal and human studies (as well as other evidence): because it is only in concert that we can get a fuller picture.

There is also a challenge with RF-EMF in particular, as opposed to many other potential toxins: it is really, really hard to get accurate measurements of exposure. In the animal trials, we could look precisely at a quantified SAR (basically absorbed radiation) or field strength (ambient radiation), because the animals were in isolated, shielded chambers. But human exposure is a whole different beast.

Your phone transmits at variable strengths (fewer bars of signal actually means the phone boosts its radio and outputs higher power!), you move through a varying ambient field all day (are you close to a WiFi router? a cell tower?), and many ways of cutting this rely on some degree of human recall (how long were you on your phone?). Plus: cellular technology is constantly changing, so the same behavior one year may have a totally different exposure profile than the next.

This creates a huge amount of noise and challenge in the data. Studies take different approaches, and we will attempt to look at them individually and in totality. But it is worth bearing these challenges in mind. In general, most studies tend to focus on simply "cell phone usage," with many studies tracking time spent on calls. Especially at large enough scale, this is perhaps the best way to get at exposure, but still leaves questions and noise.

The most noteworthy case-control cell phone study is generally considered to be the INTERPHONE study. The main paper was published in 2010, and there have been a number of followups and country-specific ones as well.

INTERPHONE was coordinated by the WHO's cancer research arm (IARC), and looked at people across 13 countries. They identified tumor cases from 2000 to 2004 and matched controls from the same populations, giving both groups detailed interviews about cell phone use history. It involved 2,708 glioma cases and 2,409 meningioma cases.

Their headline finding was that there was no overall association between cell phone use and gliomas or meningiomas ("No elevated OR [Odds Ratio] for glioma or meningioma was observed ≥10 years after first phone use."). However: when you read the details, a more nuanced picture emerges.

The details

As the WHO's International Agency for Research on Cancer (IARC; the organization that kicked off the study) said themselves in 2010 when the study results were announced:

The majority of subjects were not heavy mobile phone users by today's standards. The median lifetime cumulative call time was around 100 hours, with a median of 2 to 2-1/2 hours of reported use per month. The cut-point for the heaviest 10% of users (1,640 hours lifetime), spread out over 10 years, corresponds to about a half-hour per day.

You may wonder, as I did: for those "heaviest 10% of users" who spend more than 1,640 lifetime hours over 10 years, averaging "about a half-hour per day"... what was their outcome?

You wouldn't have guessed it from the topline "no elevated odds ratio" conclusion, but:

In the tenth [highest] decile of recalled cumulative call time, ≥1,640 h, the OR was 1.40 (95% CI 1.03-1.89) for glioma

Said another way: there was a statistically-significant 40% increased odds ratio of glioma in this set of "heavy users."

Even more than that, in this same "heavy usage" group, the OR for temporal lobe (the part of the brain most adjacent to where you hold your phone) glioma was 1.87 (95% CI 1.09-3.22). This means there was a statistically-significant 87% increased risk of temporal lobe gliomas for heavy users over people that were not regular users of their phones (i.e. they used them less than once per week!).

And even more interesting than even that: you might wonder, for this high-usage set, was there a difference in which side of the brain the tumor appeared on? And indeed, there was. Of those with glioma, "ipsilateral" phone use (i.e. mostly holding your phone on the same side of your head as where a tumor developed) came with an OR of 1.96 (95% CI 1.22-3.16) versus "contralateral" phone use (i.e. mostly holding your phone on the opposite side of your head from the tumor) at 1.25 (95% CI 0.64-2.42).

Said another way: there was a strong, statistically significant correlation between which side of their heads the glioma participants held their phone on and which side their tumor was on.

Now most people I know use their mobile phones, on a call or in their hand browsing, maybe 5 hours a day (various online stats agree with that order of magnitude). That's 150 or so hours a month, or 1,800 hours a year. INTERPHONE considered "heavy users" to be those with more than 1,640 hours of lifetime exposure.

But critically, as we will address later in this piece, holding a phone up to your ear (really the only way people used them in the 90s' INTERPHONE usage era) is a very different and more intense exposure profile than holding it in your hand. So I'm not suggesting at all that modern usage hours are simply a scaled-up version of the INTERPHONE usage.

In fact, if that were the case, cancer numbers would be off the charts right now. We'll also discuss this dynamic later.

But the point remains: 1,640 hours of lifetime exposure feels well within reach for a "normal" user today, not just a "heavy" one. And the INTERPHONE study suggests that range has a statistically significant association with glioma, and especially with ipsilateral, temporal lobe glioma.
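The arithmetic behind these usage figures is simple enough to sketch. The 1,640-hour cut-point, the 10-year spread, and the ~5 hours/day modern figure come from the text above; the helper names are mine. Keep in mind the caveat already made: this compares raw hours only, and an hour of handheld browsing is not the same exposure as an hour with the phone at your ear.

```python
INTERPHONE_HEAVY_CUTOFF_HOURS = 1_640  # top-decile lifetime call time, from the study
DAYS_PER_YEAR = 365


def hours_per_day(total_hours, years):
    """Average daily hours if total_hours is spread evenly over the given years."""
    return total_hours / (years * DAYS_PER_YEAR)


def years_to_reach(total_hours, daily_hours):
    """Years needed to accumulate total_hours at a steady daily rate."""
    return total_hours / (daily_hours * DAYS_PER_YEAR)


cutoff_daily = hours_per_day(INTERPHONE_HEAVY_CUTOFF_HOURS, years=10)
print(f"Cut-point spread over 10 years: {cutoff_daily * 60:.0f} minutes/day")  # 27 minutes/day

# Raw device hours at ~5 h/day (not equivalent to call time against the head).
print(f"Years to reach cut-point at 5 h/day: {years_to_reach(INTERPHONE_HEAVY_CUTOFF_HOURS, 5):.1f}")  # 0.9
```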

A reasonable question may arise: am I cherry-picking here? I'm only talking about the highest-usage group (more than 1,640 hours of lifetime cell phone use), when all the lower-usage groups showed much less, or zero, or negative, impact from cell phone usage.

Well: I think that group is especially relevant to our original question. We're trying to figure out whether "exposure levels and durations typical in modern life" have negative health effects. The highest-usage group in the INTERPHONE study used their phones on average about half an hour a day.

Imagine we were studying whether eating ultra-processed food (UPF) was correlated with obesity, and we found a big, well-run study that looked at food consumption of the following groups over 10 years:

And imagine that study found that for Group 6, there was a statistically-significant 40% increased risk of obesity over Group 1, but for Groups 2-5, there was no statistically significant increase. And on that basis, we said "no elevated OR for obesity was observed ≥10 years after first ultra-processed food consumption."

That's all well and good, but, uh, ultra-processed food now accounts for nearly 60% of US adult calorie consumption. So most people are way into the top group, and as such, that's the only one that is particularly relevant to look at.

And that maps almost exactly to the INTERPHONE study.

To be clear, I don't blame the INTERPHONE authors. They conducted their study based on cell phone usage at the time, the late 1990s and early 2000s. And in their press release when they released the study, they said:

Dr Christopher Wild, Director of IARC said: "An increased risk of brain cancer is not established from the data from Interphone. However, observations at the highest level of cumulative call time and the changing patterns of mobile phone use since the period studied by Interphone, particularly in young people, mean that further investigation of mobile phone use and brain cancer risk is merited."

(That quote also hints at our later consideration of "sensitive groups": INTERPHONE only looked at adults, not children.)

There are other critiques of the study (including shortcomings the investigators themselves highlight, and many others published): it had short latency (most cases had only a few years of exposure; brain tumor latency is commonly decades), it found an implausible protective effect at low exposure (OR < 1.0), which signals systematic bias pushing results towards the null, there were clearly recall errors and possible selection biases, and more.

Additionally, and importantly (this will come up again later in our analysis of the evidence), the INTERPHONE study only looked at cellular phone use as "exposure" but not cordless phone use. At the time the study was done, DECT cordless phones were common, and they also emit RF-EMF. But the INTERPHONE study put usage of those devices in the "not exposure" bucket. So when they then compared the "more exposed" groups to the "less exposed" groups, it was not a proper comparison of RF-EMF exposure. The study measured the association of "cellular phone usage" with cancer, but not of "RF-EMF exposure" with cancer (and this cordless exclusion statistically biased the study towards the null).

But at the end of the day, when looking at the data (not just the conclusions), I find the INTERPHONE study a persuasive argument in favor of glioma correlation with what might be in the range of typical modern cell phone usage. And that's even before we look at other epidemiological studies like...

Swedish researcher Lennart Hardell has conducted a series of studies over two decades. He's careful to include deceased cases, detailed laterality analyses, long followups (some 25+ years), cordless phone exposure (not just cellular phone), and dose-response analysis.

To take one of his papers as a representative example (Hardell & Carlberg (2015), Pathophysiology, a pooled analysis of two of their studies):

There are several other Hardell studies that find similar results. We'll cover their aggregate outcomes in the meta-analyses section below.

The laterality finding is crucial to addressing a possible source of bias (both here and in the INTERPHONE study). If recall bias drove these results (people with tumors overreporting phone use), they'd likely end up overreporting on both sides. There's no reason to misremember which ear you used.

But increased risk appears specifically on the side where phones were held. That's exactly what you'd expect from a true biological effect, and is strongly suggestive of a lack of bias (although there remain possibilities around differential recall of side, post-diagnosis rationalization, etc.).

With that said, Hardell's studies are often contested. His work consistently finds larger effects than other research groups, which is a clear driver of scrutiny.

He has also done a lot of studies (more than anyone else on this topic), and depending on your view on the matter, this ends up biasing meta-analyses (which we'll cover in a moment) one way or the other. Either Hardell's studies are included in the meta-analysis, which drives the association between RF-EMF and cancer up, or they are excluded, which weakens the association substantially, or even eliminates it.

Critics point to several concerns, most of all his effect sizes not being replicated by other major studies (i.e. an elevated association found in a study is correlated with that study being performed by Hardell). These concerns should be taken into account when weighing the evidence.

In contrast to findings above, there are two large-scale studies that are often cited as counterpoints: the Million Women Study in the UK, and the Danish cohort study.

The Million Women Study is a very impressive large-scale project. 1.3 million UK women were recruited and followed for health outcomes. Papers on cellular phone exposure were produced, including Schüz et al. (2022), J Natl Cancer Inst. The core finding:

Adjusted relative risks for ever vs never cellular telephone use were 0.97 (95% confidence interval = 0.90 to 1.04) for all brain tumors, 0.89 (95% confidence interval = 0.80 to 0.99) for glioma, and not statistically significantly different to 1.0 for meningioma, pituitary tumors, and acoustic neuroma. Compared with never-users, no statistically significant associations were found, overall or by tumor subtype, for daily cellular telephone use or for having used cellular telephones for at least 10 years.

So: no association found. However (emphasis mine):

In INTERPHONE, a modest positive association was seen between glioma risk and the heaviest (top decile of) cellular telephone use (odds ratio = 1.40, 95% CI = 1.03 to 1.89). This specific group of cellular telephone users is estimated to represent not more than 3% of the women in our study, so that overall, the results of the 2 studies are not in contradiction
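A quick back-of-the-envelope sketch shows why the two results need not conflict. It assumes, purely for illustration, that the INTERPHONE top-decile odds ratio of 1.40 applies to exactly 3% of users and that everyone else has no elevated risk; the function name is hypothetical.

```python
def blended_relative_risk(fraction_heavy, rr_heavy, rr_other=1.0):
    """Average relative risk across users when only a small subgroup is at higher risk."""
    return fraction_heavy * rr_heavy + (1.0 - fraction_heavy) * rr_other


# 3% heavy users at RR 1.40, remaining 97% at RR 1.00 (illustrative assumption).
overall = blended_relative_risk(0.03, 1.40)
print(f"expected ever-vs-never relative risk: {overall:.3f}")
```

An effect confined to a small heavy-use subgroup moves the "ever vs never" comparison by about one percent, far too little for that comparison to detect.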

There are, as usual, a variety of letters and rebuttals (Moskowitz, Birnbaum et al., Schüz). I find arguments on various points persuasive on both sides in terms of methodological strengths and weaknesses5.

The study authors themselves, in their reply letter, say:

We do agree, however, with both Moskowitz and Birnbaum et al. that our study does not include many heavy users of cellular phones. This study reflects the typical patterns of use by middle-aged women in the UK starting in the early 2000s.

...

Overall, our findings and those from other studies support our carefully worded conclusion that "cellular telephone use under usual conditions [Schüz et al.'s emphasis] does not increase brain tumor incidence." However, advising heavy users on how to reduce unnecessary exposures remains a good precautionary approach.

And so I repeat here: their view of "usual conditions" may or may not be in line with modern RF-EMF usage; and their suggestion that "advising heavy users on how to reduce unnecessary exposures remains a good precautionary approach" is a suggestion that by their own definitions, should likely apply to most people today.

The Danish cohort study (Frei et al. (2011), BMJ) is another oft-cited study. It looked at Danish cell phone subscribers in the early 1990s and followed up on their cancer status between 1990 and 2007. They found no statistically significant increased risk for brain / central nervous system tumors.

But this study falls victim to some of the same issues as the prior ones. The Danish study did not measure actual exposure / usage. Instead, they simply looked at the binary of whether the individual had a cellular subscription or not, so there was no way to stratify by usage. They excluded corporate subscribers from the "exposed" group, only had data on mobile phone subscriptions until 1995, and treated cordless / DECT phone users as "unexposed." Perhaps most importantly, "the weekly average length of outgoing calls was 23 minutes for subscribers in 1987-95 and 17 minutes in 1996-2002."

So, again: another study that does not feel strongly informative about the tail of exposure most relevant today.
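To put those call lengths in perspective, here is a rough conversion from weekly minutes to cumulative hours. The 1,000-hour threshold is borrowed from the heavy-use cut-off that appears in the meta-analysis discussion in this piece, and it is used here only as an illustrative yardstick. The quoted averages cover outgoing calls only, so true call time would be somewhat higher.

```python
WEEKS_PER_YEAR = 52


def years_to_reach(threshold_hours, minutes_per_week):
    """Years of steady use needed to accumulate a given number of call hours."""
    hours_per_year = minutes_per_week * WEEKS_PER_YEAR / 60
    return threshold_hours / hours_per_year


# Weekly averages quoted from the Danish cohort paper.
for period, minutes in (("1987-95", 23), ("1996-2002", 17)):
    years = years_to_reach(1000, minutes)
    print(f"{period}: {minutes} min/week -> about {years:.0f} years to reach 1,000 hours")
```

At those rates it would take on the order of half a century or more to reach 1,000 cumulative hours, which is why the cohort says little about heavy use.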

The most recent large-scale study is the Cohort Study on Mobile Phone Use and Health, or COSMOS, which remains in-progress (Feychting et al. 2024, Environ Int. is the most recent relevant follow-up).

COSMOS is a prospective cohort study following 250,000 mobile phone users recruited between 2007 and 2012 in several countries across Europe. The headline finding is no association between mobile phone use and risk of glioma, meningioma, or acoustic neuroma.

COSMOS was designed specifically to account for shortcomings in the earlier studies. Most of all, the two most relevant earlier study series (INTERPHONE & Hardell's) were case-control studies, meaning roughly that researchers found people with cancer, then found matching controls, and then asked them all about their cell phone usage.

COSMOS, by contrast, is a prospective study, which means that the researchers simply started following a population and record both cell phone usage over time and cancer diagnoses as they occur. The intention here is to address what is referred to as "recall bias." The concern is that people diagnosed with brain tumors, searching for explanations, might unconsciously overestimate their historical phone use6.

The COSMOS study, as it stands today, has somewhat limited statistical power (especially for certain tumor types like acoustic neuroma), and also is limited by the followup duration (roughly 7 years)7. There are only 149 incident cases of glioma, 89 of meningioma, and 29 of acoustic neuroma. These limitations are not a failure of the study design, simply a commentary on the fact that it is early on.
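As a rough sense of what 149 glioma cases can and cannot resolve, the sketch below uses the standard large-sample approximation that the variance of a log rate ratio is about 1/a + 1/b, where a and b are the case counts in the two groups being compared. The split of cases (15 in a hypothetical heaviest-use group, 134 in the reference group) is an assumption made up for illustration, not COSMOS's actual exposure distribution.

```python
import math


def ci_multiplier(cases_exposed, cases_reference, z=1.96):
    """Factor by which a rate ratio is multiplied/divided to get an approximate 95% CI."""
    se_log_rr = math.sqrt(1.0 / cases_exposed + 1.0 / cases_reference)
    return math.exp(z * se_log_rr)


total_glioma_cases = 149          # from the COSMOS follow-up cited above
heavy_group_cases = 15            # hypothetical: roughly a top decile
reference_cases = total_glioma_cases - heavy_group_cases

factor = ci_multiplier(heavy_group_cases, reference_cases)
assumed_rr = 1.4                  # illustrative effect size for heavy use
ci_low, ci_high = assumed_rr / factor, assumed_rr * factor
print(f"RR {assumed_rr}: approx 95% CI {ci_low:.2f} to {ci_high:.2f}")
```

Under these assumptions, even a true rate ratio of 1.4 in the heaviest-use group would come with an interval of roughly 0.8 to 2.4, which comfortably includes 1.0. That is the sense in which the study is still underpowered for subgroup effects of this size.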

Overall, I find the COSMOS study to be a very thoughtfully-designed approach, with compelling early findings of no association between mobile phone use and the cancers they are looking at. There are limitations, as with every study, but they address them well. I will be watching them closely for followups. This study is one of two places, along with population cancer trends, that I think has the strongest chance of causing me to update my conclusions on this matter.

But, despite all of that, we must remember what we outlined at the outset. Absence of evidence of an association is not evidence of absence. There is no such thing as a single study "disproving" an effect with certainty. It could be that there is no effect, but it could also be that the study is underpowered for the effect size, the followup period was too short, there was noise, or otherwise.

We've now gone through several of the biggest / most noteworthy human studies on the association between cell phone use and cancer. And as you can see, at least from my perspective, the topline conclusions do not always line up with the most relevant takeaway for our purposes today: assessing the impact of typical usage patterns.

The latest WHO-commissioned systematic review, Karipidis et al. (2024), Environ Int., concludes, among other findings:

For near field RF-EMF exposure to the head from mobile phone use, there was moderate certainty evidence that it likely does not increase the risk of glioma, meningioma, acoustic neuroma, pituitary tumours, and salivary gland tumours in adults, or of paediatric brain tumours.

An important piece of context is that Karipidis et al. isn't a meta-analysis. For a variety of reasons they did not feel comfortable performing a pooled meta-analysis and instead chose to do a systematic review with risk-of-bias / GRADE scoring to come to conclusions on certainty of evidence8. So it is also just a different approach to looking at the data than the meta-analyses are.

There are then several meta-analyses that effectively come to the conclusion that general usage is not associated with increased risk, but long-term / heavy use (and ipsilateral use) is:

Myung et al. (2009), Journal of Clinical Oncology:

Prasad et al. (2017), Neurological Sciences:

Yang et al. (2017), PLOS ONE:

Moon et al. (2024), Environmental Health:

Choi et al. (2020), Int J Environ Res Public Health sought to break down some of the variance in results. A key finding:

In the subgroup meta-analysis by research group, cellular phone use was associated with marginally increased tumor risk in the Hardell studies (OR, 1.15 (95% CI, 1.00 to 1.33; n = 10; I² = 40.1%), whereas it was associated with decreased tumor risk in the INTERPHONE studies (OR, 0.81; 95% CI, 0.75 to 0.88; n = 9; I² = 1.3%). In the studies conducted by other groups, there was no statistically significant association between the cellular phone use and tumor risk (OR, 1.02; 95% CI, 0.92 to 1.13; n = 17; I² = 8.1%).
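The mechanics of how including or excluding one research group shifts a pooled estimate can be sketched with the three subgroup figures quoted above. This is a simplified fixed-effect, inverse-variance pool written for illustration. It is not the method Choi et al. used, and pooling such heterogeneous subgroups is not a sound summary of the literature. The function names are hypothetical.

```python
import math


def log_or_and_se(odds_ratio, ci_low, ci_high, z=1.96):
    """Recover the log odds ratio and its standard error from a reported 95% CI."""
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z)
    return math.log(odds_ratio), se


def pool_fixed_effect(estimates):
    """Inverse-variance weighted average of log odds ratios, returned as an OR."""
    weighted_sum = 0.0
    total_weight = 0.0
    for odds_ratio, ci_low, ci_high in estimates:
        log_or, se = log_or_and_se(odds_ratio, ci_low, ci_high)
        weight = 1.0 / se**2
        weighted_sum += weight * log_or
        total_weight += weight
    return math.exp(weighted_sum / total_weight)


# (OR, CI low, CI high) as quoted from Choi et al. (2020).
subgroups = {
    "Hardell": (1.15, 1.00, 1.33),
    "INTERPHONE": (0.81, 0.75, 0.88),
    "Other groups": (1.02, 0.92, 1.13),
}

pooled_all = pool_fixed_effect(subgroups.values())
pooled_without_hardell = pool_fixed_effect(
    [v for name, v in subgroups.items() if name != "Hardell"]
)
print(f"pooled, all groups:      {pooled_all:.2f}")
print(f"pooled, Hardell removed: {pooled_without_hardell:.2f}")
```

The point is only directional: the pooled value moves depending on whether the Hardell subgroup is in or out, which is why inclusion choices are so contested.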

However, Choi et al. also looked at duration and found that for users with >1,000 hours of lifetime call time:

So: what's the takeaway from all these meta-analyses? At a high level: that the evidence is heterogeneous; that there doesn't seem to be an association between general phone use and cancer, but that there may be one with longer-term and especially ipsilateral use. Those conclusions are contested, and the literature continues to evolve.

If you recall from the NTP and Ramazzini animal studies above, the tumors they found were schwannomas and gliomas. Schwannomas arise from Schwann cells (the cells forming myelin sheaths around nerves). Gliomas arise from glial cells in the brain.

These are the exact same cell types implicated in the human studies: acoustic neuromas (vestibular schwannomas, which are tumors of the hearing nerve) and gliomas (the most common malignant brain tumor).

The animal studies found heart schwannomas as opposed to vestibular ones, although this isn't necessarily surprising, since the animal studies used full-body radiation rather than the narrower "phone to head" radiation of the epidemiological studies. But it's the same cell type. And the gliomas overlap directly.

Finding the same rare tumor types in the tissues where the radiation was delivered, between controlled animal experiments and large-scale observational studies, is very strong evidence.

Now that we've looked at animal and human studies on the carcinogenicity of RF-EMF, we can turn to studies and proposals on the biological mechanisms that may lead to cancer.

This is, of course, tricky. Cancer is a multistage process. You can contribute to carcinogenesis lots of different ways: oxidative stress, disrupted cell signaling, impaired DNA repair, chronic inflammation, or otherwise.

Often when this topic is brought up, people take up the physics argument that non-ionizing radiation lacks the energy to break chemical bonds, which means that this sort of RF-EMF we are examining can't do direct DNA damage via ionization (which would be an obvious carcinogenic path). This is true.

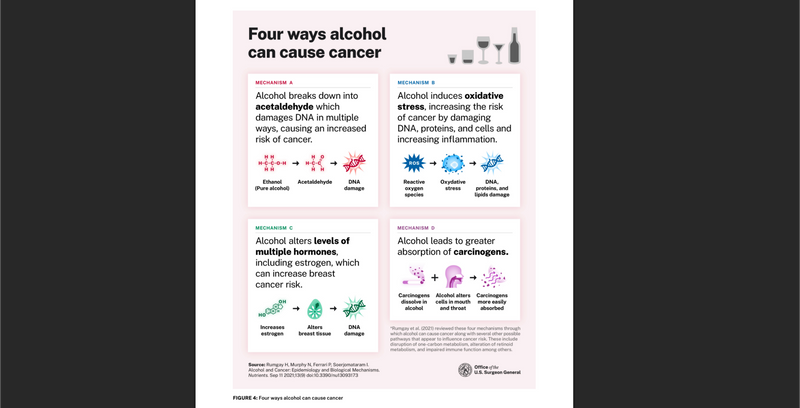

But there are of course plenty of established carcinogens that don't work through direct ionization. Here is, for example, a page from a U.S. Surgeon General's Advisory on Alcohol and Cancer risk:

Alcohol can cause cancer through breaking down into acetaldehyde, through inducing oxidative stress, through endocrine disruption, and through amplifying the effects of other carcinogens. Plus even more pathways are proposed in the cited paper (Rumgay et al. (2021), Nutrients).

It is common for carcinogenic substances to have multiple pathways that contribute to cancer risk. This may also be the case with RF-EMF. Here we'll review a few of the plausible mechanisms by which RF-EMF may affect human biology and physiology.

There are a lot of studies, both in vivo and in vitro, on the impact of RF-EMF on oxidative stress markers. In general, my view is that for a mechanism like this, where we want a finding that generalizes well enough to feed into our Bradford Hill evaluation of causality, it is best to look at the corpus in aggregate rather than point at individual studies.

The WHO-commissioned review on oxidative stress, Meyer et al. (2024), Environ Int., found the evidence effectively equivocal:

The evidence on the relation between the exposure to RF-EMF and biomarkers of oxidative stress was of very low certainty, because a majority of the included studies were rated with a high RoB level and provided high heterogeneity

They don't come to any conclusions with anything beyond "low certainty," citing high risk-of-bias and high heterogeneity between the studies. There have been many studies; why could they not get to certainty? On this front, I find the ICBE-EMF group's critique of the paper very compelling (Melnick et al. (2025), Environ Health). Here are some excerpts (although I suggest reading the whole critique if you are interested9, in addition to the original paper of course):

The ICBE-EMF group's critique raises several compelling points about excessive exclusion of studies. Of the 897 articles that Meyer et al. considered eligible, only 52 were included in their meta-analyses; 360 studies were excluded because the only biomarker reported was claimed to be an invalid measure of oxidative stress.

Going the other direction, Yakymenko et al. (2015), Electromagn Biol Med found:

Analysis of the currently available peer-reviewed scientific literature... indicates that among 100 currently available peer-reviewed studies dealing with oxidative effects of low-intensity RFR, in general, 93 confirmed that RFR induces oxidative effects in biological systems

The paper I wish was a systematic review is Schuermann & Mevissen (2021), Int J Mol Sci. Their conclusion is quite favorable to the idea that RF-EMF can cause oxidative stress (emphasis mine):

In summary, indications for increased oxidative stress caused by RF-EMF and ELF-MF were reported in the majority of the animal studies and in more than half of the cell studies.

...

A trend is emerging, which becomes clear even when taking methodological weaknesses into account, i.e., that EMF exposure, even in the low dose range, may well lead to changes in cellular oxidative balance

Also, despite all of this, there are several findings in the paper that report statistically significant impacts, even given the authors' definitions and criteria. These then get rolled into overall "low certainty" total evidence assessments, but the findings stand, including10:

This evidence, for me, is sufficient regarding the mechanistic plausibility of RF-EMF leading to biological effects that can be upstream of cancer.

Cell membranes contain ion channels that respond to voltage changes: Voltage Gated Ion Channels (VGICs). One proposed molecular mechanism for biological effects of EMF is the aberrant activation of these channels, causing inappropriate calcium influx (specifically through Voltage Gated Calcium Channels, or VGCCs).

This is a polarizing and hotly disputed perspective. Some researchers say it is central; others dispute the relevance at typical exposure levels11. It has primarily been advanced by Martin Pall, Ph.D., beginning with Pall (2013), J Cell Mol Med.

If true that RF-EMF can cause inappropriate calcium influx, it would not be surprising to see downstream effects that could lead to tumor development. Excess calcium influx is known to trigger nitric oxide production, highly-reactive peroxynitrite formation, and free radical cascades and downstream oxidative stress.

There has been recent interesting work advancing this theory, namely Panagopoulos et al. (2025), Front Public Health. They propose further that the perceived impacts of RF-EMF are actually not due to the carrier waves (in the RF-EMF spectra), but rather the Extremely Low Frequency / Ultra Low Frequency EMF that come with them in the form of their modulation, pulsation, and variability characteristics.

But if the VGCC sensors are being impacted by the fields generated by coherently oscillating in-channel ions (less than a nanometer away from them), that means far less field strength is required to "tip" the sensor, as opposed to an RF-EMF source from outside the body. They argue that the RF-EMF (or more precisely its related ELF/ULF modulation fields), due to its anthropogenic polarized/coherent character, can force ions to move in tandem, and those ions then naturally emit their own field which impacts the sensors12.

Aside from oxidative stress, the NTP follow-up study (Smith-Roe et al. (2019), Environ Mol Mutagen), as noted earlier, found significant DNA strand breaks in certain organs of exposed animals, not through direct ionization, but through indirect mechanisms.

More broadly, Weller et al. (2025), Front Public Health performed a recent scoping review and evidence map study of RF-EMF genotoxicity and found that across 500 studies:

The evidence map presented here reveals statistically significant DNA damage in humans and animals resulting from man-made RF-EMF exposures, particularly DNA base damage and DNA strand breaks. The evidence also suggests plausible mechanistic pathways for DNA damage, most notably through increased free radical production and oxidative stress. Sensitivity to damage varied by cell type, with reproductive cells (testicular, sperm and ovarian) along with brain cells appearing particularly vulnerable.

...

Overall, there is a strong evidence base showing DNA damage and potential biological mechanisms operating at intensity levels much lower than the ICNIRP recommended exposure limits.

There are also other proposed mechanisms, including gene expression impacts (see Lai & Levitt (2025), Rev Environ Health).

Overall, my goal with this biological plausibility section is to address the "physics impossibility" argument, which assumes direct ionization is the only mechanism by which EMF could impact humans. Biology is more complex than that. Multiple plausible mechanisms have been identified and replicated across laboratories.

In the scientific literature on EMFs, there are a lot of accusations of funding source bias and other ad-hominem attacks on people's perspectives. After much debate, I have decided not to spend time analyzing any of this in this essay.

Conflicts and biases can undoubtedly be real. But from my perspective, the reason you would need to discuss them is if you believe someone is lying or faking data. If you're willing to assume that the data is real, then I think it is much better to simply look in detail at the data and the methodologies, as we have done above, and draw your own conclusions.

I'm choosing to view all authors mentioned in this piece as good faith contributors to the discourse. I have no direct reason to suspect otherwise. And we will simply look at the reported details and draw our own conclusions.

Before we go to our Bradford Hill assessment of the evidence, let's cover the skeptical arguments, as they should be factored in.

As discussed in the mechanisms section: direct ionization (and thermal effects) aren't the only pathways to biological harm. Oxidative stress, ion channel disruption, and indirect DNA damage are all plausible mechanisms with experimental support. There are likely others out there we will discover as well!

Moreover, by way of simple analogy: there are clearly systems in our body that are sensitive to non-ionizing, non-thermal electromagnetic radiation in other parts of the spectrum, like blue light. Based on the evidence, I think the burden is on the side arguing "this can't possibly have any physical effects" rather than the side saying that it could.

First of all: I believe that in the US, the authorities have taken a fundamentally flawed approach (and are currently under an appeals court order to further explain their reasoning or update it). For more, see my prior article on the flawed assumption of US regulation of RF-EMF.

The heart of the matter is that they have explicitly taken the stance that they do not need to protect from non-thermal effects of RF-EMF, despite the positive findings of the NTP study that was commissioned for exactly that reason (among others).

But beyond that, the global authorities are not actually harmonized on this matter. Ramirez-Vazquez et al. (2024), Environ Res. found:

The international reference levels established by ICNIRP are also recommended by WHO, IEEE and FCC, and are adopted by most countries. However, some countries such as Canada, Italy, Poland, Switzerland, China, Russia, France, and regions of Belgium establish more restrictive limits than the international ones.

There are also more specific precautionary measures that have been taken, including France and Israel's bans on WiFi in nurseries and mandates that it be minimized in schools.

This reflects a fundamental misunderstanding of how science works. Going back to the beginning of this piece:

A null finding is not evidence of no effect.

You can't average a positive finding with a non-finding and conclude "the truth is in the middle." The non-finding might just reflect a study that wasn't capable of detecting the effect.

If I were cherry-picking random noise, you'd expect the positive findings to be scattered incoherently: different tumor types, different exposure patterns, no biological logic.

Instead, the positive findings cluster in ways that make sense:

This is why coherence is one of the Bradford Hill criteria we are looking at.

This is the vibes argument, and I get it. I felt it too. But an ad-hominem is not a sufficient reason to dismiss the evidence. The scientific evidence exists independently of who's talking about it.

This is, to me, the strongest skeptical evidence-based argument against RF-EMF carcinogenicity, and the one I'm most interested to watch unfold over the coming years.

Population incidence trends of glioma and other cancers have remained quite stable, and certainly lower than would be expected if a large percentage of the population faced exposure levels similar to the "heavy users" of the INTERPHONE and other epidemiological studies and suffered an OR of 1.4 or so. This is why I find the argument compelling.

On the other hand, there exist data to support some incidence trends (particularly possible increases in temporal lobe glioma), and many confounding factors (latency, usage patterns changing, data challenges, diagnostic changes, etc.).

As de Vocht (2021), Bioelectromagnetics notes:

Ecological data [note: incidence trends are an example of this] are generally considered weak epidemiological evidence to infer causality, and the presented data provide little evidence to confirm or refute mobile phone use or RF radiation as a cancer hazard.

By way of analogy on another exposure: cigarette consumption per capita in the US rose from 54/year to 4,345/year at its peak in 1963 (National Cancer Institute). But lung cancer rates didn't peak until the early 1990s. If you were a regulator in 1935, a couple decades into mass adoption of cigarettes, you could have pointed to relatively "flat" cancer rates as evidence that smoking was safe. But you would have been wrong.

There's an interesting paper from Sato et al. (2019), Bioelectromagnetics where they simulated the effects of heavy usage in Japan:

Under the modeled scenarios, an increase in the incidence of malignant brain tumors was shown to be observed around 2020.

Said another way: we are just entering the period where (at least according to their assumptions), increased incidence of tumors might begin to be detected. This is the latency issue; even though mobile phones boomed in the 90s and 2000s, one would not expect an immediate rise in cancer diagnoses13.
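The latency logic can be sketched with a toy model. This is not the model Sato et al. used, and every number in it is a hypothetical assumption: a heavy-user share that ramps from 0% in 2000 to 50% in 2015, a relative risk of 1.4 for heavy users, and a fixed 20-year latency applied as a simple lag.

```python
def heavy_user_fraction(year, start=2000, end=2015, plateau=0.5):
    """Hypothetical share of the population who are heavy users in a given year."""
    if year <= start:
        return 0.0
    if year >= end:
        return plateau
    return plateau * (year - start) / (end - start)


def relative_incidence(year, rr_heavy=1.4, latency_years=20):
    """Population incidence relative to baseline, with exposure lagged by latency."""
    lagged_fraction = heavy_user_fraction(year - latency_years)
    return 1.0 + (rr_heavy - 1.0) * lagged_fraction


for year in (2010, 2020, 2025, 2030, 2035):
    print(year, f"{relative_incidence(year):.2f}")
```

In this toy setup incidence stays flat for two decades after adoption begins and only then starts to climb, even though the assumed risk is present the whole time. Flat trends during the lag period cannot distinguish "no effect" from "effect not yet visible."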

My overall take on this skeptical argument is that it is strong, and moreover, it is the most important place for us to continue to look if we want to falsify a prediction of RF-EMF carcinogenicity.

We've now looked at the studies and responded to some skeptical points. So let's go back to our Bradford Hill viewpoints on causality and briefly touch on each.

I'm going to apply each viewpoint specifically to the question:

Is there reason to believe that RF-EMF, at exposure levels and durations typical in modern life, may cause increased risk of cancer, at least for sensitive members of the population?

Strength: In the context of what were defined as heavy users in the early-2000s (but I now view as typical usage): Moderate. But for an overall association shown between RF-EMF exposure and cancer: Weak.

In the highest exposure strata, reported effects are often in the ~1.2-1.6 range (sometimes higher depending on definitions and inclusion), which is moderate. In animals (NTP, Ramazzini), the signal for certain tumors is meaningfully stronger.

Consistency: Mixed to moderate.

In favor of consistency: similar directions show up repeatedly when you look specifically at longer duration / heavier cumulative use (and laterality), across multiple countries and in multiple meta-analyses.

But opposed: results vary a lot by study design, by exposure definition, by comparison group, and by inclusion/exclusion choices in reviews.

On the animal studies, however, there is Moderate (or perhaps even Strong) consistency on the observed endpoints.

Specificity: Moderate. There's tumor-type specificity across lines of evidence; human concern clusters around glioma and schwannoma-derived tumors; anatomical specificity around temporal-lobe proximity (aligning with near-field exposure).

Temporality: Strong. When we observed a signal in the epidemiological studies, it tended to be in longer-latency categories, which is coherent with carcinogenesis and temporality.

Biological gradient (dose-response): Mixed to Moderate. We do see repeated evidence of high cumulative call-time bins showing higher risk, but we also don't see smooth dose-response gradients going up along the way.

Plausibility: Moderate to Strong. I see the oxidative stress evidence as quite compelling for biological plausibility of a mechanism that is known to be upstream of carcinogenesis.

Coherence: Mixed. On one hand, we have very strong positive evidence for coherence in the overlap between animal study tumors and epidemiological observations, the same rare tumors appearing in both. But at the same time, we have very weak coherence between many of the studies and the population incidence trends.

Experiment: Strong for animals; Weak for humans (and we probably can't have it any other way).

Analogy: Mixed. Reasonable analogies exist: other non-ionizing exposures can have meaningful biological effects without direct ionization; environmental carcinogens often show early signals in subsets before the story becomes "obvious."

My read is not "Bradford Hill = slam dunk, RF-EMF carcinogenicity in humans at typical levels is proven, QED."

It's more like "there's enough across experiments + mechanistic plausibility + patterned epidemiologic signals to treat RF-EMF as a credible hazard," while also admitting that the human epidemiology is heterogeneous and the population-trend tension means we should be cautious about claiming a large, universal risk increase under modern conditions.

We've focused this post on the potential carcinogenic effects of RF-EMF usage, because that is where the greatest body of evidence exists. But there are certainly other proposed endpoints with evidence of their own.

Sperm are produced outside the body cavity, are temperature-sensitive, and are highly motile, which makes them a very sensitive biological sensor. We've also, in the last couple decades, started to carry around phones in our pockets close to the testes.

Adams et al. (2014), Environ Int.:

Exposure to mobile phones was associated with reduced sperm motility (mean difference -8.1% (95% CI -13.1, -3.2)) and viability (mean difference -9.1% (95% CI -18.4, 0.2)), but the effects on concentration were more equivocal. The results were consistent across experimental in vitro and observational in vivo studies. We conclude that pooled results from in vitro and in vivo studies suggest that mobile phone exposure negatively affects sperm quality.

Houston et al. (2016), Reproduction:

Among a total of 27 studies investigating the effects of RF-EMR on the male reproductive system, negative consequences of exposure were reported in 21. Within these 21 studies, 11 of the 15 that investigated sperm motility reported significant declines

Kim et al. (2021), Environ Res.:

Mobile phone use decreased the overall sperm quality by affecting the motility, viability, and concentration. It was further reduced in the group with high mobile phone usage. In particular, the decrease was remarkable in in vivo studies with stronger clinical significance in subgroup analysis. Therefore, long-term cell phone use is a factor that must be considered as a cause of sperm quality reduction.
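One way to see why Adams et al. treat some endpoints as firmer than others is to convert their reported confidence intervals back into approximate z-scores and two-sided p-values. The sketch below assumes the intervals are symmetric normal-theory 95% intervals, which is an approximation, and the function name is hypothetical.

```python
import math


def z_and_p_from_ci(estimate, ci_low, ci_high, z_crit=1.96):
    """Approximate z-score and two-sided p-value implied by a 95% CI."""
    standard_error = (ci_high - ci_low) / (2 * z_crit)
    z = estimate / standard_error
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return z, p_two_sided


# Mean differences (percentage points) quoted from Adams et al. (2014).
z_motility, p_motility = z_and_p_from_ci(-8.1, -13.1, -3.2)
z_viability, p_viability = z_and_p_from_ci(-9.1, -18.4, 0.2)
print(f"motility:  z = {z_motility:.2f}, p = {p_motility:.4f}")
print(f"viability: z = {z_viability:.2f}, p = {p_viability:.4f}")
```

The motility interval corresponds to a p-value well below 0.01, while the viability interval crosses zero and lands just above the conventional 0.05 line, so the two findings carry different weight.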

On pregnancy/birth outcomes, the evidence base is smaller, and much of it is animal-experimental rather than human-observational. The WHO-commissioned review on animals (Cordelli et al. (2023), Environ Int.) showed moderate certainty evidence for a small detrimental effect on fetal weight, but very low certainty for most other endpoints.

If the standard is "high-certainty proof of harm at real-world exposures," we are not there. But if the standard is "is there enough reason to avoid unnecessary exposure during pregnancy when it's easy to do so?", I think a reasonable person may say yes based on this data.

There are a variety of other endpoints with suggestive early evidence:

Sleep: Bijlsma et al. (2024), Front Public Health ran a small double-blind, placebo-controlled crossover study and found:

Sleep quality was reduced significantly (p < 0.05) and clinically meaningful during RF-EMF exposure compared to sham-exposure as indicated by the PIRS-20 scores. Furthermore, at higher frequencies (gamma, beta and theta bands), EEG power density significantly increased during the Non-Rapid Eye Movement sleep (p < 0.05).

Appetite: Wardzinski et al. (2022), Nutrients found:

Exposure to both mobile phones strikingly increased overall caloric intake by 22-27% compared with the sham condition. Differential analyses of macronutrient ingestion revealed that higher calorie consumption was mainly due to enhanced carbohydrate intake... Our results identify RF-EMFs as a potential contributing factor to overeating, which underlies the obesity epidemic.

Stroke risk: Jin et al. (2025), Medicine (Baltimore) reported a statistically significant causal association between duration of mobile phone use and increased risk of large artery atherosclerosis (OR = 1.120; 95% CI = 1.005-1.248; P = .040), though the finding was only barely significant and other stroke subtypes showed no association.

We've gone through this whole essay implicitly, and occasionally explicitly, carrying with us an important, undefined phrase: "at exposure levels and durations typical in modern life."

Let's look at a single person. Their RF-EMF "exposure" is really the sum of two sub-exposures: near field exposure and far field exposure.

Near field exposure comes from RF-EMF sources "close by" to you. Far field comes from those further away. You can think of the line between those classifications of exposure as roughly 1/6th the wavelength14 of the wave in question. In the near field, there are strong inductive and capacitive effects of the electromagnetic field, and in the far field, these effects are less pronounced.

This means that near field effects come from sources (like cell phones) that are within inches (or less) of your body. Far field effects come from anything further away.
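As a rough illustration of where "within inches" comes from, here is a small sketch that computes the near field boundary for a few frequencies. It assumes the "1/6th of a wavelength" rule of thumb corresponds to the common wavelength / (2π) convention, and the three example frequencies are my own picks for illustration, not measurements of any particular device.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458

def near_field_boundary_cm(frequency_hz):
    """Approximate near/far field boundary, using the wavelength / (2 * pi) rule of thumb."""
    wavelength_m = SPEED_OF_LIGHT_M_S / frequency_hz
    return 100 * wavelength_m / (2 * math.pi)

# Illustrative frequencies only (assumptions, not tied to a specific phone or router).
EXAMPLE_FREQUENCIES_HZ = {
    "700 MHz (low-band cellular)": 700e6,
    "2.4 GHz (WiFi)": 2.4e9,
    "28 GHz (millimeter wave)": 28e9,
}

for label, frequency_hz in EXAMPLE_FREQUENCIES_HZ.items():
    boundary_cm = near_field_boundary_cm(frequency_hz)
    print(f"{label}: ~{boundary_cm:.2f} cm ({boundary_cm / 2.54:.2f} in)")
```

Under these assumptions the boundary runs from a few inches at the lowest cellular frequencies down to a couple of millimeters at millimeter-wave frequencies, so the near field only matters for devices held against or very close to the body.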

And moreover, there are a ton of other variables that have shifted over the past decades as mobile phones have gotten popular:

5G is a particularly interesting case of uncertainty. There are arguments that it could be better from a public health perspective and arguments that it could be worse, but it is undoubtedly different from the generations before (and its effects on humans have effectively not been studied due to how new it is).

A lot of 5G deployments overlap with 4G, at 0.6-6GHz. But the newer "millimeter wave" deployments can be an order of magnitude higher in frequency, in the 24-40GHz range.

5G introduces beamforming technology. With pre-5G networks, a base station was always broadcasting a relatively broad pattern. With 5G, the base station uses arrays of antenna elements to point energy directly towards your phone. This could be better from an exposure perspective (you aren't being "washed" in the field all the time), or worse (beamforming can create higher localized intensities in the direction of the user).
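To see why beamforming can cut both ways, here is a toy model of an idealized antenna array. It assumes a uniform linear array with half-wavelength spacing, lossless isotropic elements, and the same total transmit power split equally across elements; real 5G base stations are considerably more complicated, and the element count is hypothetical.

```python
import cmath
import math

def relative_power_gain(num_elements, steer_deg, look_deg):
    """Far-field power toward look_deg, relative to one antenna radiating the same total power.

    Idealized uniform linear array: half-wavelength spacing, isotropic elements, no losses.
    """
    steer = math.sin(math.radians(steer_deg))
    look = math.sin(math.radians(look_deg))
    # Split the total power equally, so each element's amplitude is 1/sqrt(N).
    amplitude = 1 / math.sqrt(num_elements)
    # Sum each element's contribution with the phase shift for half-wavelength spacing.
    field = sum(
        amplitude * cmath.exp(1j * math.pi * n * (look - steer))
        for n in range(num_elements)
    )
    return abs(field) ** 2

NUM_ELEMENTS = 8  # hypothetical array size
for angle_deg in (0, 5, 15, 30, 60):
    gain = relative_power_gain(NUM_ELEMENTS, steer_deg=0, look_deg=angle_deg)
    print(f"{angle_deg:>2} degrees off the beam: {gain:.2f}x")
```

In this idealized setup the power in the steered direction is N times that of a single antenna, while most other directions receive far less than 1x. That is the tradeoff in miniature: less background exposure away from the beam, more in it.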

For an overview, see Simkó & Mattsson (2019), Int J Environ Res Public Health, which looked at 94 publications and found:

Eighty percent of the in vivo studies showed responses to exposure, while 58% of the in vitro studies demonstrated effects. The responses affected all biological endpoints studied. There was no consistent relationship between power density, exposure duration, or frequency, and exposure effects. The available studies do not provide adequate and sufficient information for a meaningful safety assessment, or for the question about non-thermal effects.

We haven't examined "sensitivity" in detail, largely because most of the studies performed look at population-level exposures and because the most obviously sensitive population (children) is even more out of bounds for direct experimentation.

There are at least two categorical reasons that sensitive populations could be more meaningfully affected:

Gandhi et al. (2012), Electromagn Biol Med suggest:

The SAR for a 10-year old is up to 153% higher than the SAR for the SAM model. When electrical properties are considered, a child's head's absorption can be over two times greater, and absorption of the skull's bone marrow can be ten times greater than adults.

That is: the measured near field energy absorbed by a child can be dramatically higher than what an adult absorbs. This means that the same phone, or iPad, could result in significantly more radiation exposure for a child than their parent. In fact, if Gandhi et al. are correct, it could be that children using mobile devices would actually absorb more energy than is allowed by the regulatory limits, as those limits are (remarkably) measured assuming the exposed individual is a 220 lb, 6'2" male.
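The arithmetic behind that last claim can be sketched as follows. It takes the "up to 153% higher" figure as a worst-case multiplier (2.53x) and compares against the US FCC limit of 1.6 W/kg averaged over 1 g of tissue; the reported SAR values for the phones are hypothetical, and the function names are mine.

```python
FCC_SAR_LIMIT_W_PER_KG = 1.6  # US limit, averaged over 1 g of tissue
CHILD_FRACTIONAL_INCREASE = 1.53  # "up to 153% higher" (Gandhi et al.), treated as a worst case

def worst_case_child_sar(reported_sar_w_per_kg, fractional_increase=CHILD_FRACTIONAL_INCREASE):
    """Scale a SAM-based SAR value by the worst-case increase for a 10-year-old."""
    return reported_sar_w_per_kg * (1 + fractional_increase)

def max_reported_sar_within_limit(limit=FCC_SAR_LIMIT_W_PER_KG,
                                  fractional_increase=CHILD_FRACTIONAL_INCREASE):
    """Highest SAM-based SAR that would still stay under the limit after scaling."""
    return limit / (1 + fractional_increase)

# Hypothetical reported SAR values for three phones, in W/kg.
for reported in (0.5, 1.0, 1.5):
    scaled = worst_case_child_sar(reported)
    status = "over" if scaled > FCC_SAR_LIMIT_W_PER_KG else "within"
    print(f"reported {reported:.2f} W/kg -> worst case {scaled:.2f} W/kg ({status} the limit)")

print(f"threshold: {max_reported_sar_within_limit():.2f} W/kg")
```

Under these assumptions, any phone whose SAM-based SAR is above roughly 0.63 W/kg could exceed the limit in the worst case for a child, even though it passes comfortably when tested against the adult model.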

I would conjecture that many people's lifetime RF-EMF exposure has not risen steadily, but has gone up and down non-monotonically. Perhaps:

Without a better understanding, we are just shooting in the dark, and the applicability of historical studies to our modern exposures is in question.

This is, to me, perhaps the scariest and strongest point for precaution. We have, as I see it, little to no idea what we are actually exposed to right now or how it might be affecting us.

If you've gotten this far, you deserve an award. Thanks for reading.

I wish I could come out of all this analysis with a dead-simple conclusion like "RF-EMF at modern exposure levels is likely increasing glioma risk by X%," or "RF-EMF at modern levels is likely to be safe."

And yet, I don't believe either of those is an appropriate interpretation of the data. Here's my best shot at summing up my conclusions:

The weight of scientific evidence supports that there is reason to believe that RF-EMF, at exposure levels typical in modern life, may increase the risk of adverse health effects.

But also: there is immense uncertainty, and it is not clear how applicable historical studies are to our modern exposure levels given constantly changing characteristics of the exposure profile (this could cut either way, positive or negative).

We are effectively running an uncontrolled experiment on the world population with waves of new technology which have no robust evidence of safety.

If you believe new exposures should be demonstrated to be safe before being rolled out to you, you should take precautions around RF-EMF exposures (which have not been demonstrated to be safe).

If you only believe precautions are warranted for exposures which are demonstrated to be harmful, then perhaps you don't need to.

I'm not saying "EMFs definitely cause cancer in everyone" (that's not how environmental health science works anyway). I'm saying "there is substantial, coherent evidence of increased risk, particularly with long-term heavy exposure, and significant uncertainty given the ever-evolving landscape, and the current regulatory posture of 'assumed safe' is not supported by the science."

"No (or uncertain) evidence of harm" is not the same as "evidence of no harm" or "evidence of safety."

What frustrates me most about some of the debate around this topic is when people (not everyone!) point to weak, inconsistent, or no evidence for specific harm, and use that to say that it is safe. That is not a rigorous approach. The evidence needs to be looked at in totality.

We saw similar patterns with tobacco, lead, and asbestos. Arguments were made that "the science isn't settled" or "we need more research" or "the mechanisms aren't fully understood", and in the meantime, exposures continued.

The precautionary principle says: when there's evidence of the possibility of serious potential harm, prudent action is not to wait for certainty. It is to take precautions.

Last: it's possible to hold two ideas in your head at once: that these technologies have broadly been a net good for the world thanks to productivity gains, and that they may have negative health consequences that make you want to minimize your own exposure.

On carcinogenicity specifically, there are two areas I will be watching closely:

If ecological studies and prospective studies like COSMOS do not show, over the coming decade or two, increases in the endpoints that would be consistent with RF-EMF causing harm (ipsilateral, temporal lobe gliomas; possibly endpoints associated with phones being in pockets¹⁵; etc.), especially among the heaviest-user cohorts, that would weaken the argument.

This is not meant to be a "how to protect yourself from EMFs" post. And I'm not suggesting we abandon modern technology (I use a cell phone and am writing this essay on WiFi at a local coworking space, although with wired headphones and wired computer peripherals!).

But in general, the lowest-hanging fruit:

These precautions are mostly cheap or free and have no real downside. Reducing EMF exposure is a low-cost hedge against a possibly high-consequence risk.

We live in a society where lots of people drink alcohol, and most everyone is aware it ain't great for you and that they should weigh the tradeoffs. Some abstain. Most drink in moderation. Why couldn't RF-EMF be treated the same?

Disclosure: I co-founded a company called Lightwork Home Health, a home health assessment company that helps people evaluate their lighting, air quality, water quality, and, yes, EMF exposure (and more). As such, I have an economic interest in this matter. I co-founded Lightwork after becoming convinced by the evidence of these types of environmental toxicities. That's why I started the business. This research isn't a post-hoc way for me to justify Lightwork's existence!

I'll be working on Part II: ELF-MF. If you have any comments in the meantime, I'm all ears.